In order to better understand the vulnerability of Wikipedia to both unwitting misinformation and intentional disinformation, we ran a pilot study focusing on four well-known pro-Kremlin disinformation outlets (SouthFront, NewsFront, InfoRos and Strategic Culture Foundation).

Source: EUvsDiSiNFO.EU, April 19, 2022


The Kremlin continues to insulate the Russian population from reliable outside information, and has taken an increasingly threatening stance towards Wikipedia. Russian media regulator Roskomnadzor claimed that Wikipedia had become the source of “a new line of constant attacks on Russians” and that its articles promoted “an exclusively anti-Russian interpretation of events”. The future of Wikipedia in Russia is up in the air, but for now the online encyclopaedia remains accessible.

Wikipedia is one of the wonders of our time. It’s nothing short of remarkable how big the site has grown and how fast. Celebrating its 20th anniversary in 2021, it had reached more than 55 million articles in over 300 languages. It’s not just huge by content, it’s also one of the most engaged websites globally, ranking 10th according to Alexa, and Wikipedia pages feature regularly among the top Google search results on almost any given topic. The most astonishing part of it: much of this is achieved by millions of voluntary contributors worldwide who edit the encyclopaedia for free.

Though Wikipedia is by far the biggest site of its kind, far too little is known about its vulnerability to information manipulation. Some have argued that Wikipedia is one of the social platforms best protected from mis- and disinformation. That might well be the case, given that its setup and editorial policy differ hugely from those of Facebook, YouTube, Twitter, TikTok and others.

That said, the collaborative setup of Wikipedia doesn’t come without its drawbacks. The missing top-down direction over editing sometimes leads to conflicts between editors, also known as edit warring. Edit warring often erupts between editors acting in good faith, who simply lose their temper over something close to their heart. There’s even a dedicated Wikipedia page featuring the “lamest edit wars” to highlight the pointless, but mostly good-hearted nature of edit warring.

However, not all edits are done in good faith. A recent example of a misuse of the platform’s openness is the infiltration of Chinese-language Wikipedia, which the Wikimedia Foundation investigated for nearly a year. There’s also “revenge editing” – inserting false, biased or defamatory content into articles, especially biographies of living people.

From sanctioned entities to reference points in Wikipedia

In order to better understand the vulnerability of Wikipedia to both unwitting misinformation and intentional disinformation, we ran a pilot study last year focusing on four well-known pro-Kremlin disinformation outlets – SouthFront, NewsFront, InfoRos and Strategic Culture Foundation. All four are linked to Russian intelligence services and are sanctioned by the US Department of the Treasury for attempts to interfere in the 2020 US elections.

Before diving into the findings, a comment on the methodology. The research aimed to answer three questions. First, what is the potential harm caused by references to the selected disinformation outlets? Second, are the links to the outlets intentional or the result of mere negligence? Third, how could the impact of these references be reduced?

In a first step, as many Wikipedia articles as possible referencing the domains in question were mapped and collected using several sources: Wikipedia’s own search, other search engines (Google, Bing, Seznam) and backlink databases (Semrush, BuzzSumo). Then, the revision history of all these articles was collected and processed, identifying revisions in which references were added or removed. And finally, data was further processed to add aggregate counts (e.g., the current count of references for each article and for each unique target URL).
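The second and third steps can be illustrated with a minimal Python sketch. It is not the tooling used in the study, which the text does not describe in detail. It assumes the revision history of an article is already available as a list of (revision ID, wikitext) pairs, oldest first. The function names, the URL pattern and the sample history are all hypothetical, and the domain list contains only the three domains named in this article (the InfoRos domain is not given here, so it is left out).

```python
import re
from collections import Counter
from urllib.parse import urlparse

# Domains named in the article; subdomains (e.g. "en.news-front.info") also match.
TARGET_DOMAINS = ("southfront.org", "news-front.info", "strategic-culture.org")

# Rough URL matcher for wikitext; an assumption, not a full URL grammar.
URL_RE = re.compile(r"https?://[^\s\[\]|<>\"}]+")


def target_links(wikitext):
    """Count the links in one revision's wikitext that point at a target domain."""
    found = Counter()
    for url in URL_RE.findall(wikitext):
        host = (urlparse(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in TARGET_DOMAINS):
            found[url] += 1
    return found


def reference_changes(revisions):
    """Yield (rev_id, added, removed) for each revision that changes target links.

    `revisions` is an iterable of (rev_id, wikitext) pairs, oldest first.
    """
    previous = Counter()
    for rev_id, text in revisions:
        current = target_links(text)
        added = current - previous      # links present now but not before
        removed = previous - current    # links present before but not now
        if added or removed:
            yield rev_id, sorted(added.elements()), sorted(removed.elements())
        previous = current


# Made-up revision history for illustration only.
history = [
    (101, "Text without references."),
    (102, "Claim.<ref>https://southfront.org/report-a</ref>"),
    (103, "Claim.<ref>https://southfront.org/report-a</ref> "
          "More.<ref>[https://en.news-front.info/item-b title]</ref>"),
    (104, "Claim. More.<ref>[https://en.news-front.info/item-b title]</ref>"),
]

changes = list(reference_changes(history))
current_counts = target_links(history[-1][1])  # aggregate count for the latest revision
```

Comparing multisets of links between consecutive revisions, rather than diffing raw text, keeps the sketch insensitive to unrelated edits in the same revision. Summing `current_counts` across articles would give the per-URL aggregate counts mentioned above.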

The results

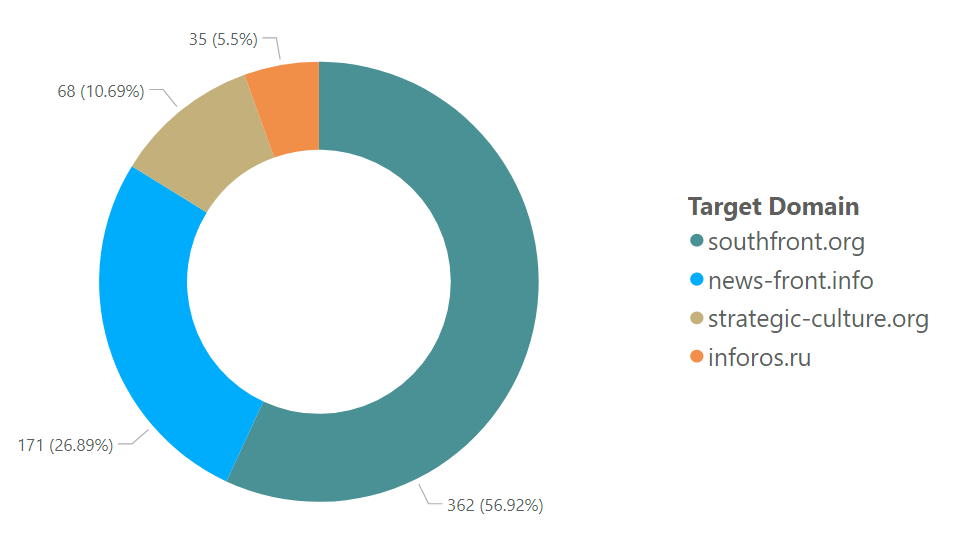

An analysis of tens of thousands of revisions of the pre-identified articles showed that, as of late 2021, the four disinformation outlets in the focus of the study were used as reference points in at least 625 articles and had at some point appeared in at least 690 articles. The bulk of the articles in question refer to southfront.org (57 per cent), followed by news-front.info (27 per cent).

Regarding the topics, most of the relevant articles were linked to conflicts in the Middle East, featuring keywords such as “Syria/Syrian”, “offensive”, “(civil) war”, but also “Russian” or “intervention”.

The majority of articles referring to one of the four pro-Kremlin disinformation outlets appear on five language versions of Wikipedia: Russian (136 articles), Arabic (70), Spanish (52), Portuguese (45) and Vietnamese (32). Russian is also one of the few language versions where news-front.info is the most common source of the four.

The English and French editions, at one point, also contained a large number of articles referencing the sanctioned websites. However, in December 2019, a proposal on the “Deprecation of fake news/disinformation sites” was adopted and southfront.org, along with news-front.info, was banned. Following this, a large pruning took place and most references to the sanctioned websites on English-language Wikipedia were removed.

What is noteworthy is both the strength of individual action and the community-driven aspect of Wikipedia here: the decision was proposed by a single editor and implemented by experienced editors with certain elevated rights, but it was open for comments from any other user. This is a path that could be taken by editors of other language editions as well.

Potential impact

The articles in the dataset have a combined daily average of over 88,000 views. Eighteen of these articles have more than 1,000 views daily, and five of those still contain references to the four websites, including the most-viewed article, which is the Iran article on the English Wikipedia with more than 11,000 daily views.

That said, these numbers alone don’t mean much. For example, the reference included in the Iran article is a press release from the Shanghai Cooperation Organisation re-published by strategic-culture.org; while both of these are hardly unbiased sources, taken at face value, this is a valid reference for the piece of information provided. A thorough analysis of each reference would be needed to assess how much the factual integrity of the articles suffered.

Even so, this once again illustrates a common problem with disinformation sources, including state-sponsored outlets such as RT and Sputnik: they often publish largely factual coverage of world events, only to slip in biased or misleading information where it matters. The façade of solid reporting becomes a vehicle for the payload.

This brings us to the next point, the potential reputational damage from allowing bad sources. Reliability is paramount for Wikipedia. The fact that literally anyone can contribute to Wikipedia means that its reliability as well as its neutrality has been scrutinised and compared to the reliability of traditional encyclopaedias.

To clarify what constitutes a reliable source, most language editions of Wikipedia maintain a version of the English Wikipedia’s page “Reliable Sources”, which provides practical guidance for editors. There, disinformation outlets are not mentioned explicitly; instead, the term “state-sponsored fake news sites” is used: “Some sources are blacklisted, and can not be used at all. Blacklisting is generally reserved for sources which are added abusively, such as state-sponsored fake news sites with a history of addition by troll farms.” This is not absolute though, as at least some blacklisted sources can be locally whitelisted, according to Wikipedia.
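The precedence described here, a blacklist that a local whitelist can override, can be modelled in a few lines of Python. This is a sketch of the rule as quoted, not of Wikipedia’s actual implementation. The patterns, the whitelisted path and the function name are hypothetical and are not copied from any Wikipedia list.

```python
import re

# Hypothetical patterns for illustration only.
GLOBAL_BLACKLIST = [r"\bsouthfront\.org\b", r"\bnews-front\.info\b"]
LOCAL_WHITELIST = [r"\bsouthfront\.org/about\b"]  # made-up local exception


def is_link_allowed(url, blacklist=GLOBAL_BLACKLIST, whitelist=LOCAL_WHITELIST):
    """Return True unless the URL matches the blacklist without a whitelist override."""
    # A local whitelist match takes precedence over any blacklist match.
    if any(re.search(p, url, re.IGNORECASE) for p in whitelist):
        return True
    return not any(re.search(p, url, re.IGNORECASE) for p in blacklist)
```

Under these assumed patterns, `is_link_allowed("https://southfront.org/some-article")` is rejected, while the whitelisted path and any unrelated domain pass.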

A “non-exhaustive list of sources whose reliability and use on Wikipedia are frequently discussed” is maintained on yet another page. Note that both Sputnik and RT are explicitly listed as unreliable sources, but there is no reference, for example, to SNA, the rebranded German version of Sputnik. Southfront.org and news-front.info are listed as “state-sponsored fake news sites”, but they are missing from the main list on the page.

Was there foul play or not?

Based on the data we were able to gather, there are no grounds to believe that the use of these references was the result of malicious information manipulation. We didn’t spot any suspicious patterns either in the timing of the additions or in the context of other activity by the main contributors.

However, as noted above, the very presence of disinformation outlets poses a threat to Wikipedia’s own reputation and the integrity of its service. Furthermore, benign references to factual articles make it more plausible that a piece of disinformation will also be used as a reference, because the number of references already in existence lends the source itself some credibility.

Reducing Wikipedia’s vulnerability to information manipulation and next steps

On the English version of Wikipedia, there seems to be a consensus that state-sponsored disinformation sites aren’t legitimate sources, no matter the factuality of the particular article referenced. Still, these guidelines could be even clearer, and the lists of banned disinformation sources should be consolidated. One can only guess whether other language versions will follow suit, but there is nothing stopping anyone from launching that debate, pointing to the English Wikipedia example as a best practice.

More likely than not, the issue is not limited to these four websites. We used the four as an example of well-known, officially designated disinformation sources which some Wikipedia editions have already acted against. In this way, they serve as a good case study of the different approaches in place and exemplify the community-driven aspect of Wikipedia moderation.

As understanding the threats is an essential part of our work against information manipulation, EUvsDisinfo will be looking into a number of less-studied platforms over the course of the year to fill the knowledge gap.